It’s helpful to explain how ComfyUI started and what it has evolved into. One of the best things about GitHub, and open source projects in general, is that you can go back in time to when a project launched and compare it with all of the progress it has made since.

So, what is ComfyUI?

Brief history of ComfyUI

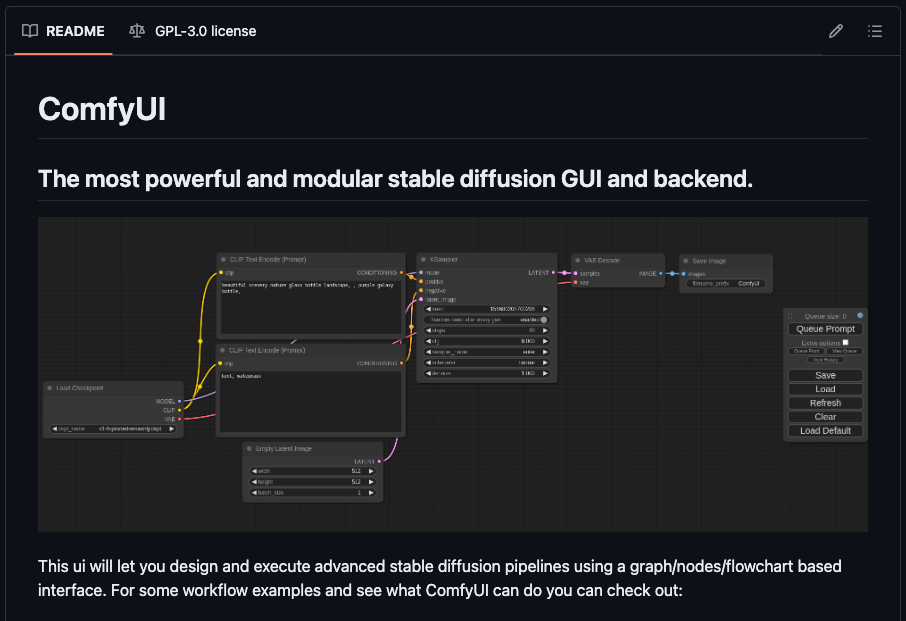

This is from the repo’s initial commit on January 16, 2023.

ComfyUI is a powerful and modular stable diffusion GUI.

For beginners like me, let’s break down that introductory statement to understand how ComfyUI started. Then we’ll fast-forward to what ComfyUI is today.

If you are not familiar with AI tools, and AI image generation tools in particular, this breakdown should help.

Stable Diffusion is a text-to-image AI model. Like other text-to-image models, it takes input in the form of text, known as a prompt, and generates an image based on that input.

You have probably heard of a few text-to-image models.

DALL-E is OpenAI’s text-to-image model; others include Adobe’s Firefly, Google’s Imagen, and Midjourney. What sets Stable Diffusion apart is that it is open source: its code and model weights are publicly available. All of the other text-to-image generators mentioned above are closed source.

Modular, as used in the ComfyUI introduction, means the software is built from components designed to be reused. Just as modular homes are assembled from standard-sized sections, ComfyUI is assembled from standard components that can be combined and reused in different ways.

With each part of the introductory sentence broken down, we can define ComfyUI as a powerful graphical user interface (GUI), built from reusable components, for generating images from text.

ComfyUI gives you a graphical, point-and-click interface for working with Stable Diffusion.

If you have used open source AI tools, you probably know that many of them have to be run from a command prompt or a code editor.

If you haven’t used AI tools, especially open source ones, be aware that running them directly usually takes solid technical skills, including familiarity with the command prompt.

That’s where ComfyUI started. Today it is still a graphical user interface for AI image generation tools, but it is also much more.

What is ComfyUI now

The open source project now has over 2,500 commits and includes many additional features that make it one of the more exciting developments in the AI ecosystem.

One of the most exciting features is workflows. As the description in the initial commit says, ComfyUI was built to be modular, which means its components are made to be reused.

In ComfyUI, each step of your image generation process is a node, such as loading a model, encoding a prompt, or sampling an image. You connect these nodes in the graphical interface, and the finished graph is a workflow. That alone is useful; even better, you can export your workflows and import workflows that others have built.
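To make the idea of nodes, links, and exportable workflows more concrete, here is a minimal Python sketch of a workflow as plain data. It is modeled loosely on the JSON that ComfyUI can export in its "API format", where each node has an id, a `class_type`, and `inputs`, and a link to another node is written as `[source_node_id, output_index]`. The node class names are ComfyUI core nodes, but the model file name, prompt text, and parameter values are placeholders, and the helper functions (`links`, `missing_sources`, `execution_order`, `export_workflow`, `import_workflow`) are hypothetical illustrations, not part of ComfyUI.

```python
import json

# Hypothetical, simplified workflow modeled on ComfyUI's "API format" export:
# each key is a node id, "class_type" names the node type, and an input written
# as [node_id, output_index] links to another node's output.
# The model file name and all parameter values are placeholders.
WORKFLOW = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "model.safetensors"}},
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a lighthouse at sunset", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry", "clip": ["1", 1]}},
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal",
                     "denoise": 1.0, "model": ["1", 0], "positive": ["2", 0],
                     "negative": ["3", 0], "latent_image": ["4", 0]}},
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"filename_prefix": "comfy", "images": ["6", 0]}},
}


def links(workflow):
    """Yield (source_id, output_index, target_id, input_name) for every link."""
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            if (isinstance(value, list) and len(value) == 2
                    and isinstance(value[0], str) and isinstance(value[1], int)):
                yield value[0], value[1], node_id, name


def missing_sources(workflow):
    """Return (target_id, input_name) pairs whose source node does not exist."""
    return [(dst, name) for src, _, dst, name in links(workflow)
            if src not in workflow]


def execution_order(workflow):
    """Topologically sort nodes so each node comes after the nodes it uses."""
    deps = {node_id: set() for node_id in workflow}
    for src, _, dst, _ in links(workflow):
        deps[dst].add(src)
    ready = sorted(n for n, d in deps.items() if not d)
    order = []
    while ready:
        current = ready.pop(0)
        order.append(current)
        # Any node whose last remaining dependency was `current` is now ready.
        for node_id, d in deps.items():
            if current in d:
                d.remove(current)
                if not d:
                    ready.append(node_id)
        ready.sort()
    if len(order) != len(workflow):
        raise ValueError("workflow has a cycle or a link to a missing node")
    return order


def export_workflow(workflow):
    """Serialize a workflow to JSON text for saving or sharing."""
    return json.dumps(workflow, indent=2)


def import_workflow(text):
    """Load a workflow from JSON text and reject links to missing nodes."""
    workflow = json.loads(text)
    problems = missing_sources(workflow)
    if problems:
        raise ValueError(f"links to missing nodes: {problems}")
    return workflow


if __name__ == "__main__":
    shared = export_workflow(WORKFLOW)   # "export" the workflow as JSON text
    loaded = import_workflow(shared)     # "import" it back and check the links
    print(execution_order(loaded))
```

Running the script prints the node ids in dependency order, so the checkpoint loader comes before the prompt encoders, the sampler, the decoder, and the save node. A dependency-ordered pass like `execution_order` is just one way to decide what runs first; it is not necessarily how ComfyUI schedules nodes internally. Real ComfyUI exports can also carry more information (the regular UI export stores node positions and link lists for the canvas), so treat this as a model of the idea rather than a parser for actual files.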

Having a graphical interface for these image models is a huge step forward compared to working through the command prompt.

Importing workflows removes even more complexity: instead of building every step yourself, you can load a ready-made workflow and start generating images.

What’s in the future

That’s where ComfyUI is today, but I can see the tool growing into other areas as well. Its system of nodes and modules also makes sense for AI agents and other multi-step AI processes. It is not outside the realm of possibility that ComfyUI becomes the standard GUI for many more AI tools.
